Real-World Data for Physical AI

OpenGraph builds a scalable engine for real-world data collection, so robots can learn the physical world across manufacturing floors and private homes.

Trusted by professionals at

Data is the bottleneck in Physical AI

Physical AI is advancing rapidly, but progress is gated by one critical resource: real-world data.

Unlike language or vision models, Physical AI can't rely on internet-scale datasets. Every demonstration must be collected in real environments with real objects.

Technology

Purpose-built for Physical AI data

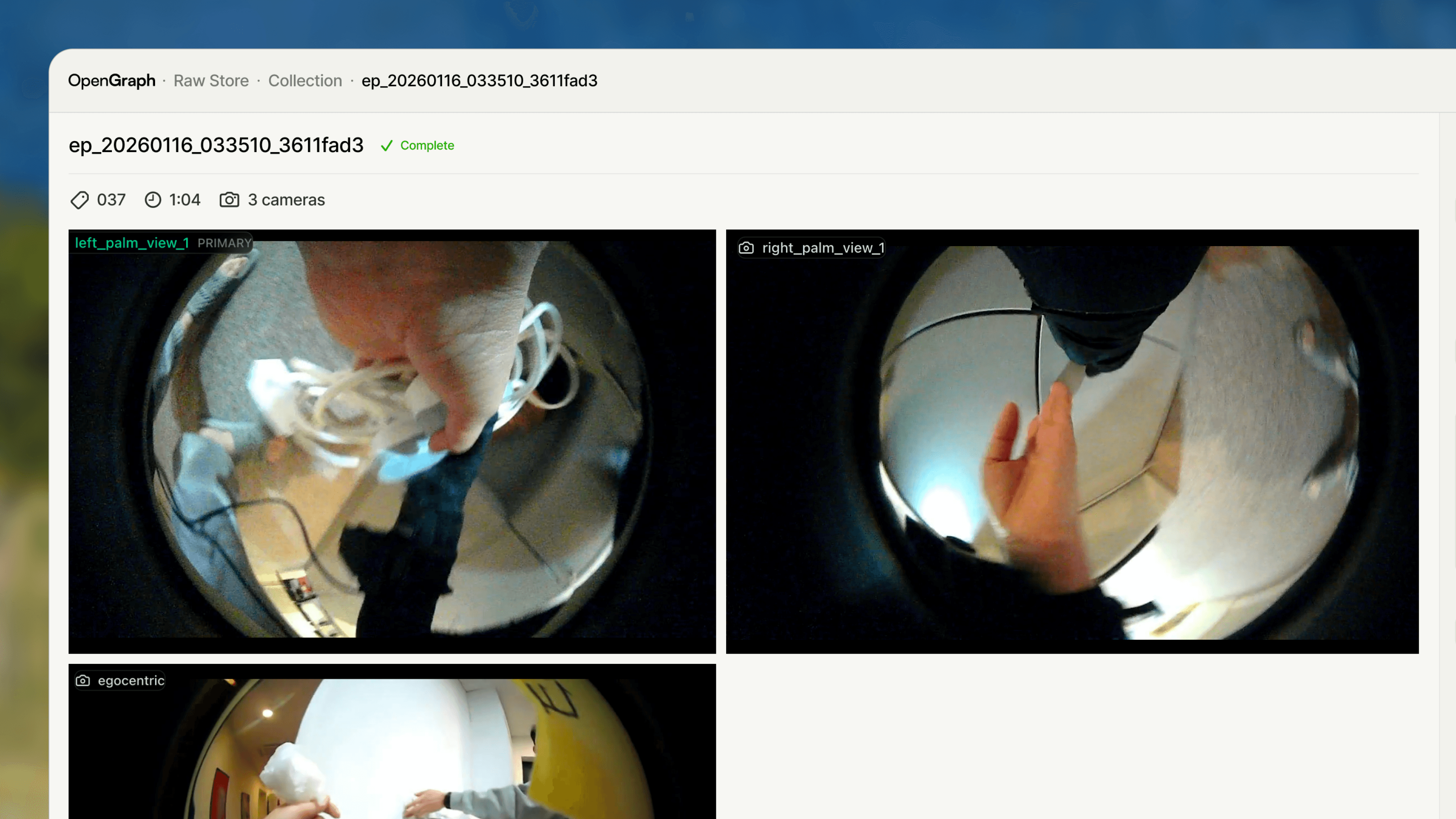

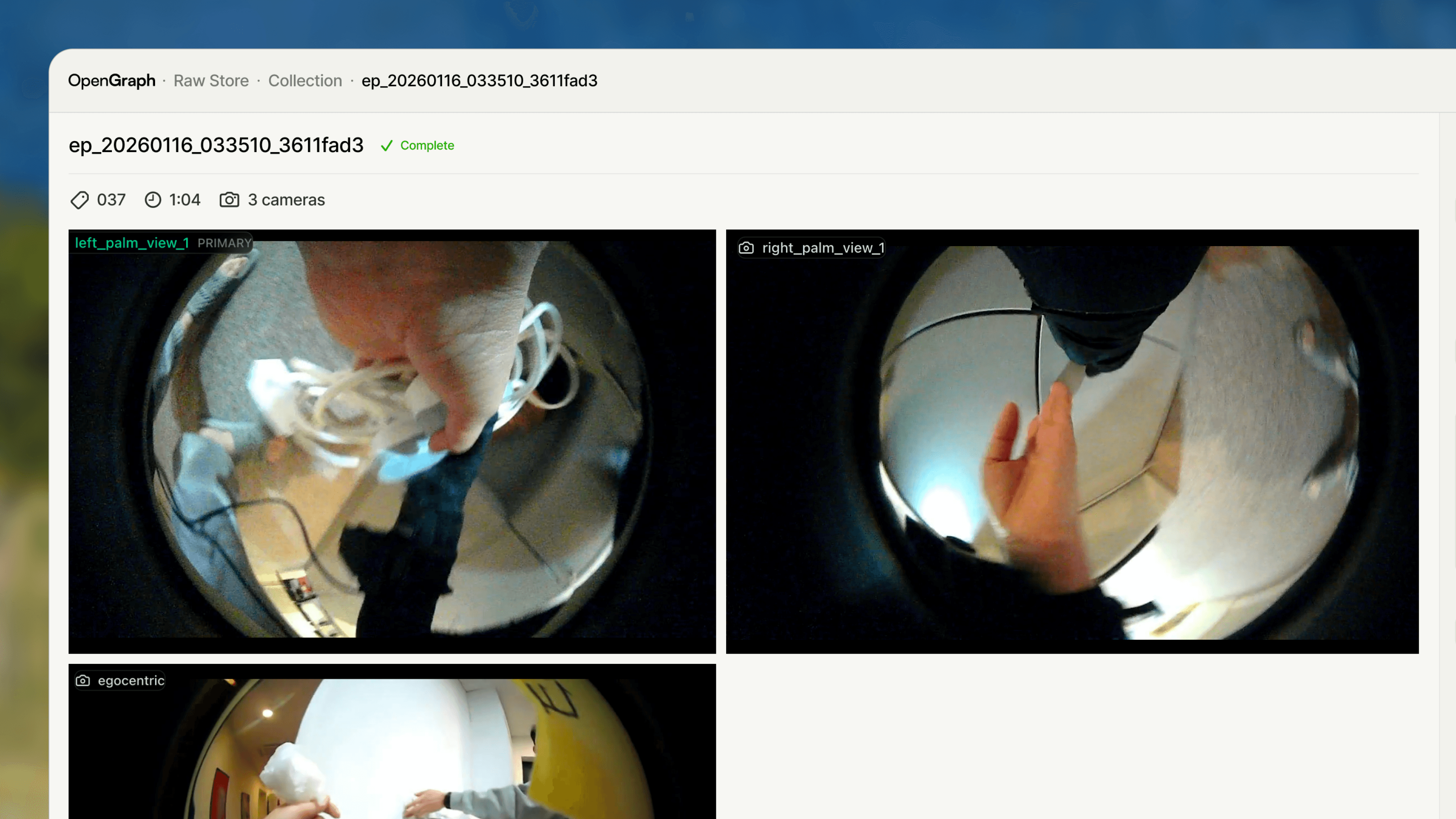

Diverse Modalities, Real Experience

We capture rich multimodal data, with a focus on high-frequency force and tactile feedback.

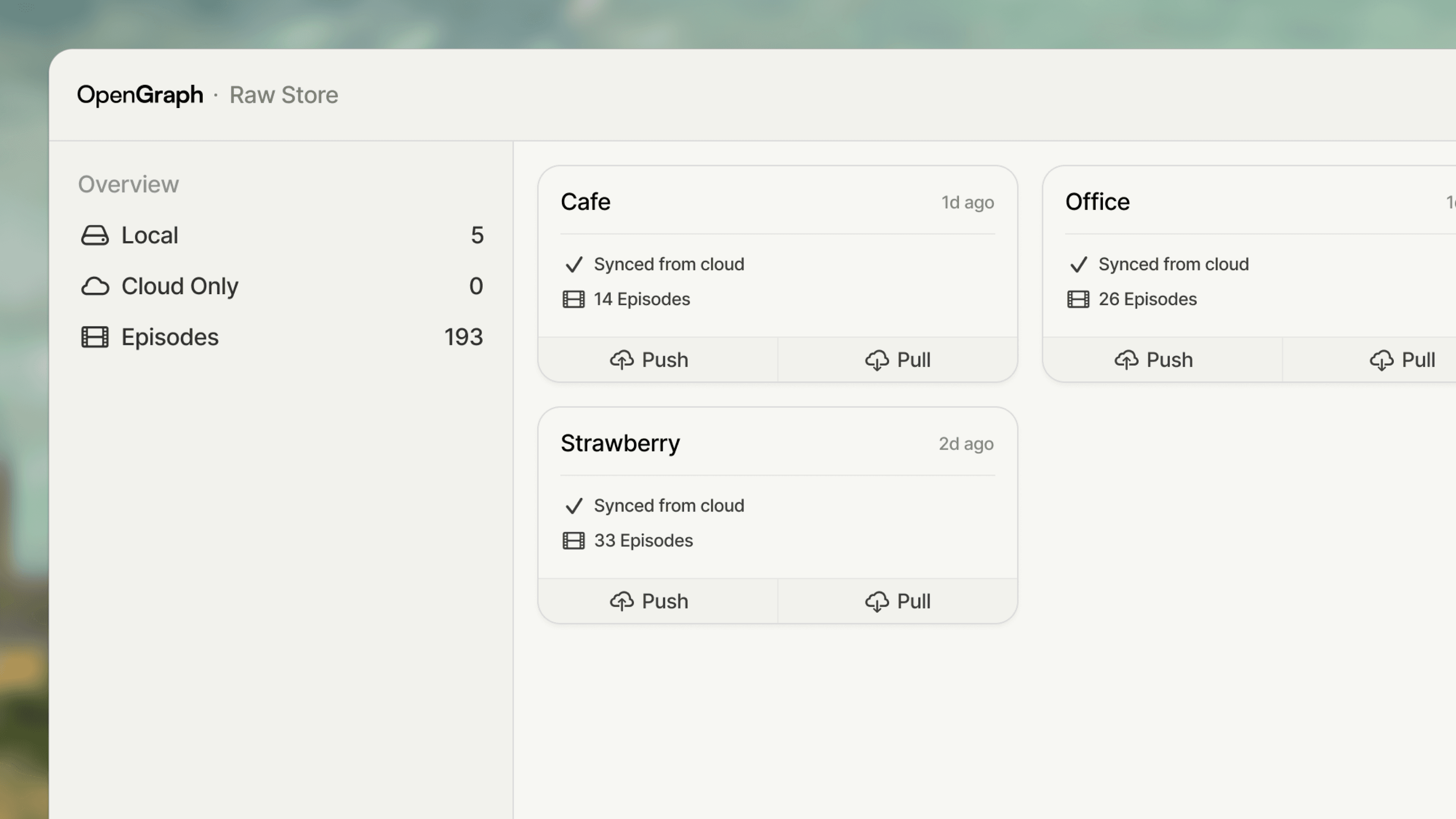

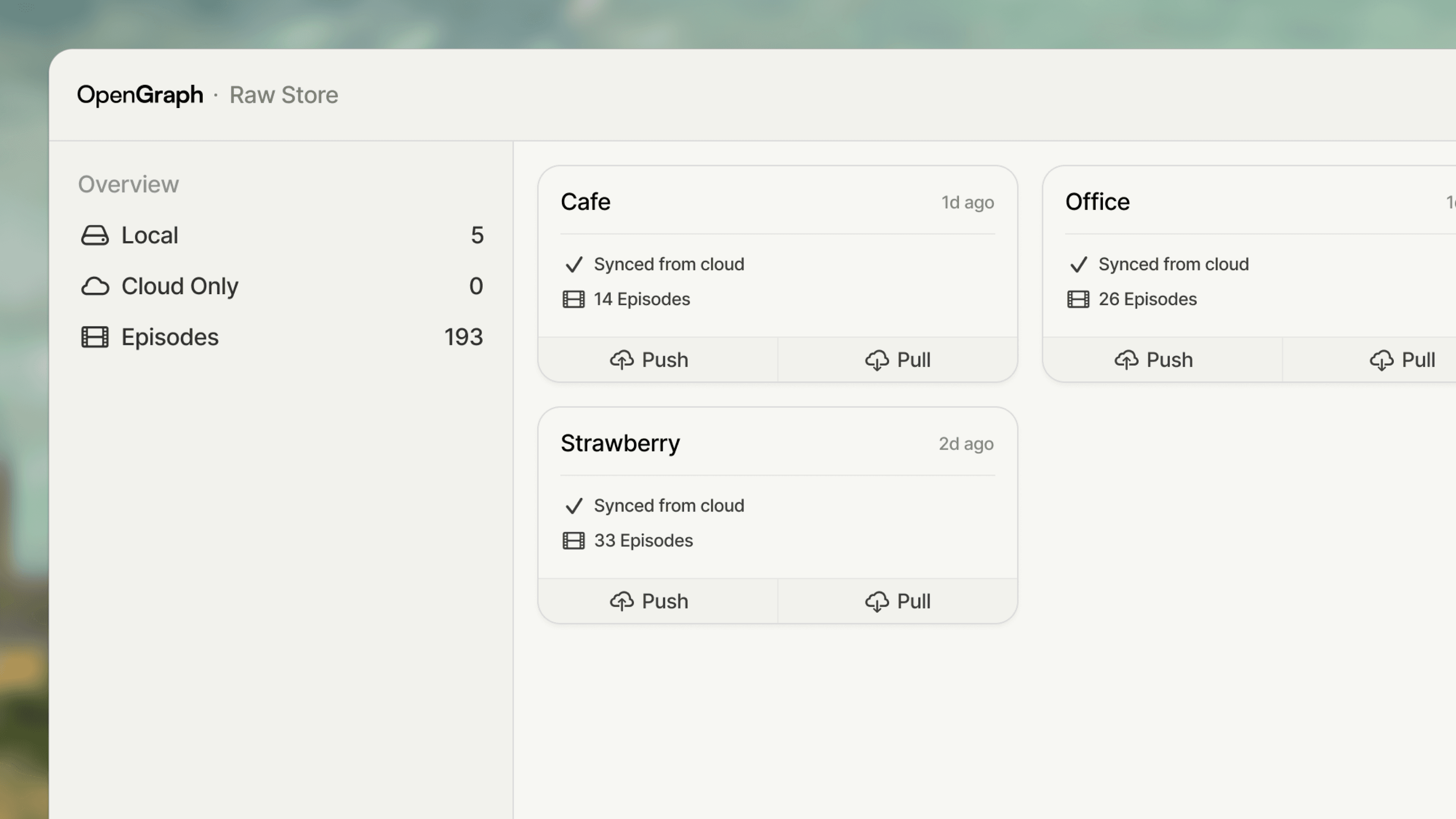

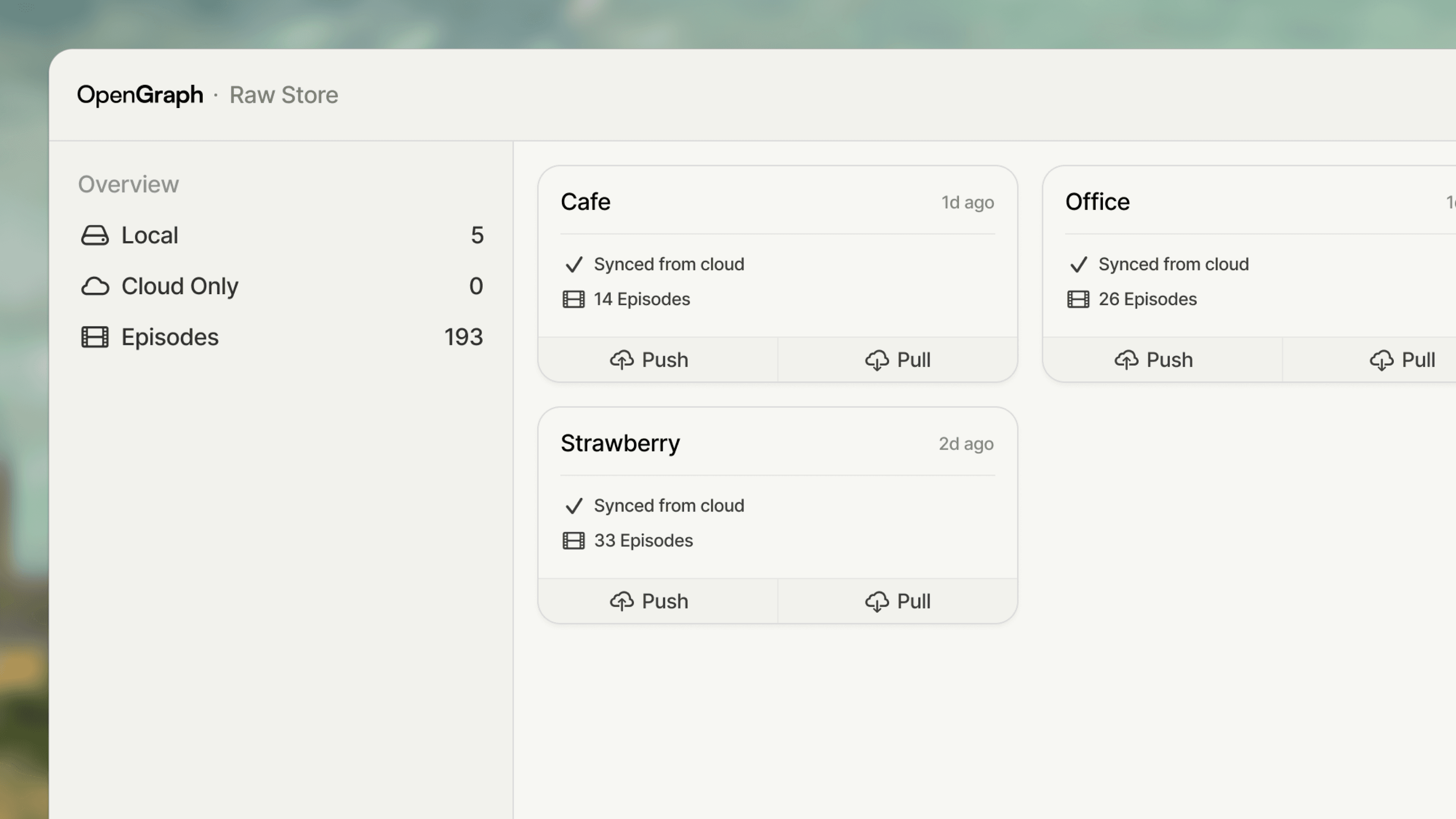

Infrastructure for Scale

We build scalable infrastructure that connects environments, operators, and hardware, so that learning compounds over time.

Spinning the Data Flywheel

We keep humans, robots, and data in a continuous feedback loop to drive fast, meaningful improvement.

Our setups

On-demand data collection

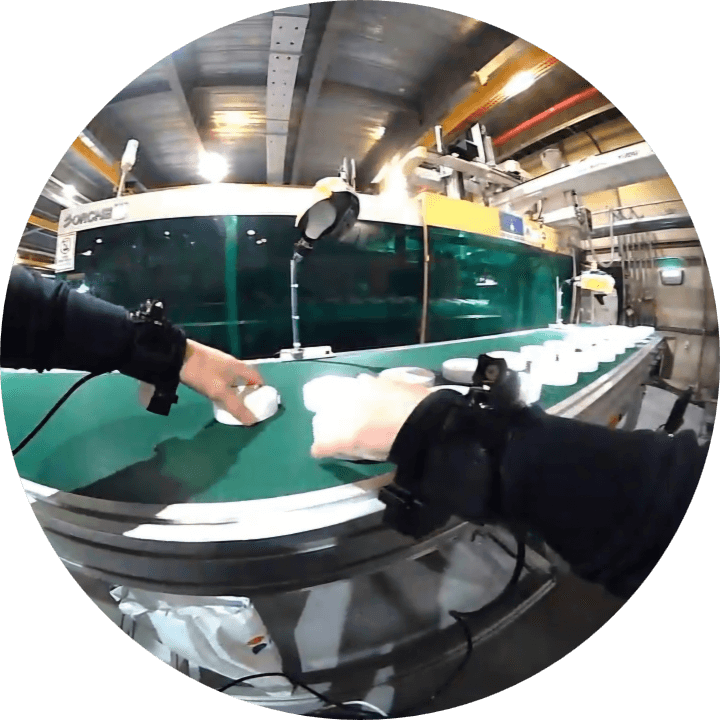

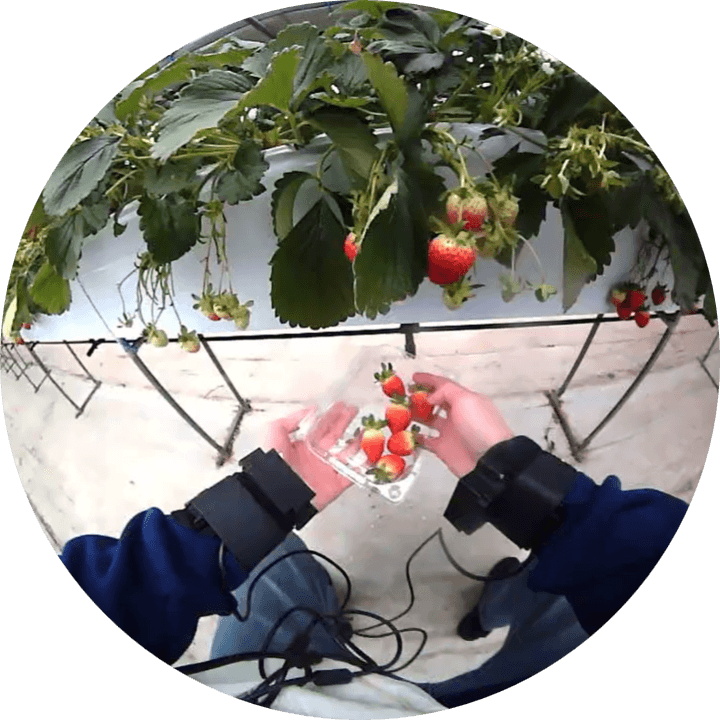

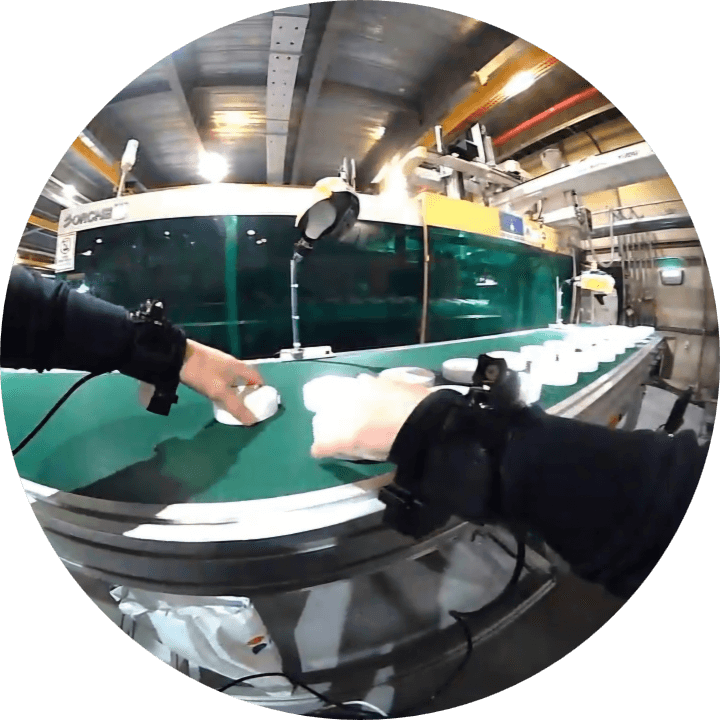

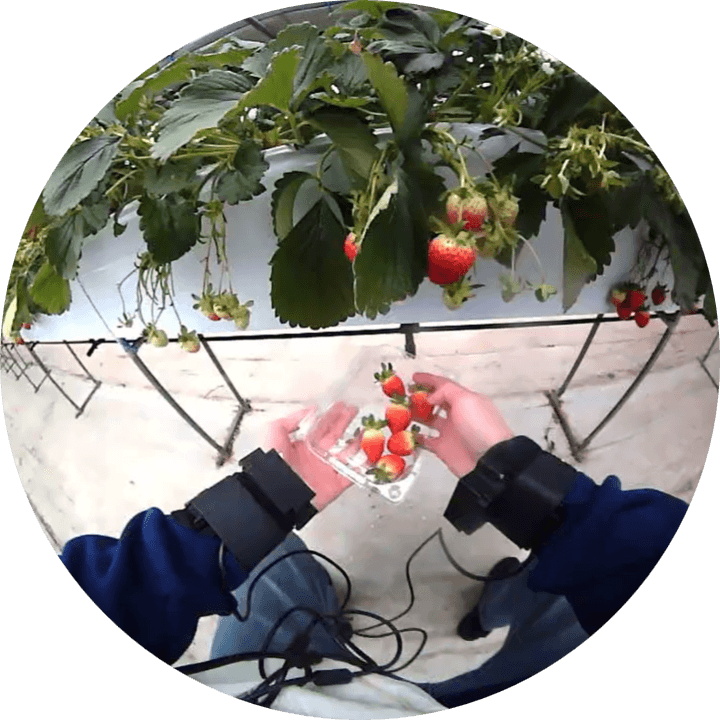

Ready-to-deploy Multimodal Acquisition

A turnkey configuration for capturing synchronized vision, pose, and contact data, optimized for your training pipeline.
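Capturing synchronized streams from sensors that run at different rates usually means mapping every sample onto a shared clock. As a minimal sketch, assuming each stream carries sorted timestamps in seconds on a common clock, the hypothetical helper `align_nearest` below pairs each camera frame with the nearest contact-sensor sample. The 30 Hz and 1 kHz rates in the example are illustrative only and do not describe OpenGraph's actual hardware.

```python
from bisect import bisect_left
from typing import List, Sequence


def align_nearest(reference_ts: Sequence[float], stream_ts: Sequence[float]) -> List[int]:
    """For each reference timestamp, return the index of the nearest sample in stream_ts.

    Hypothetical helper: both sequences are assumed sorted ascending and on the same clock.
    """
    if not stream_ts:
        raise ValueError("stream_ts must not be empty")
    indices = []
    for t in reference_ts:
        # Position where t would be inserted to keep stream_ts sorted.
        i = bisect_left(stream_ts, t)
        if i == 0:
            indices.append(0)
        elif i == len(stream_ts):
            indices.append(len(stream_ts) - 1)
        else:
            # Pick whichever neighbour is closer in time (ties go to the earlier sample).
            before, after = stream_ts[i - 1], stream_ts[i]
            indices.append(i - 1 if t - before <= after - t else i)
    return indices


if __name__ == "__main__":
    # Illustrative rates: 30 Hz camera frames vs. a 1 kHz force/tactile sensor.
    camera_ts = [k / 30 for k in range(5)]
    force_ts = [k / 1000 for k in range(200)]
    print(align_nearest(camera_ts, force_ts))
```

Nearest-sample matching is the simplest choice; interpolating the faster stream between neighbouring samples is a common alternative when the signal changes quickly.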

Our Focus Environments

We specialize in environments that are hard to access

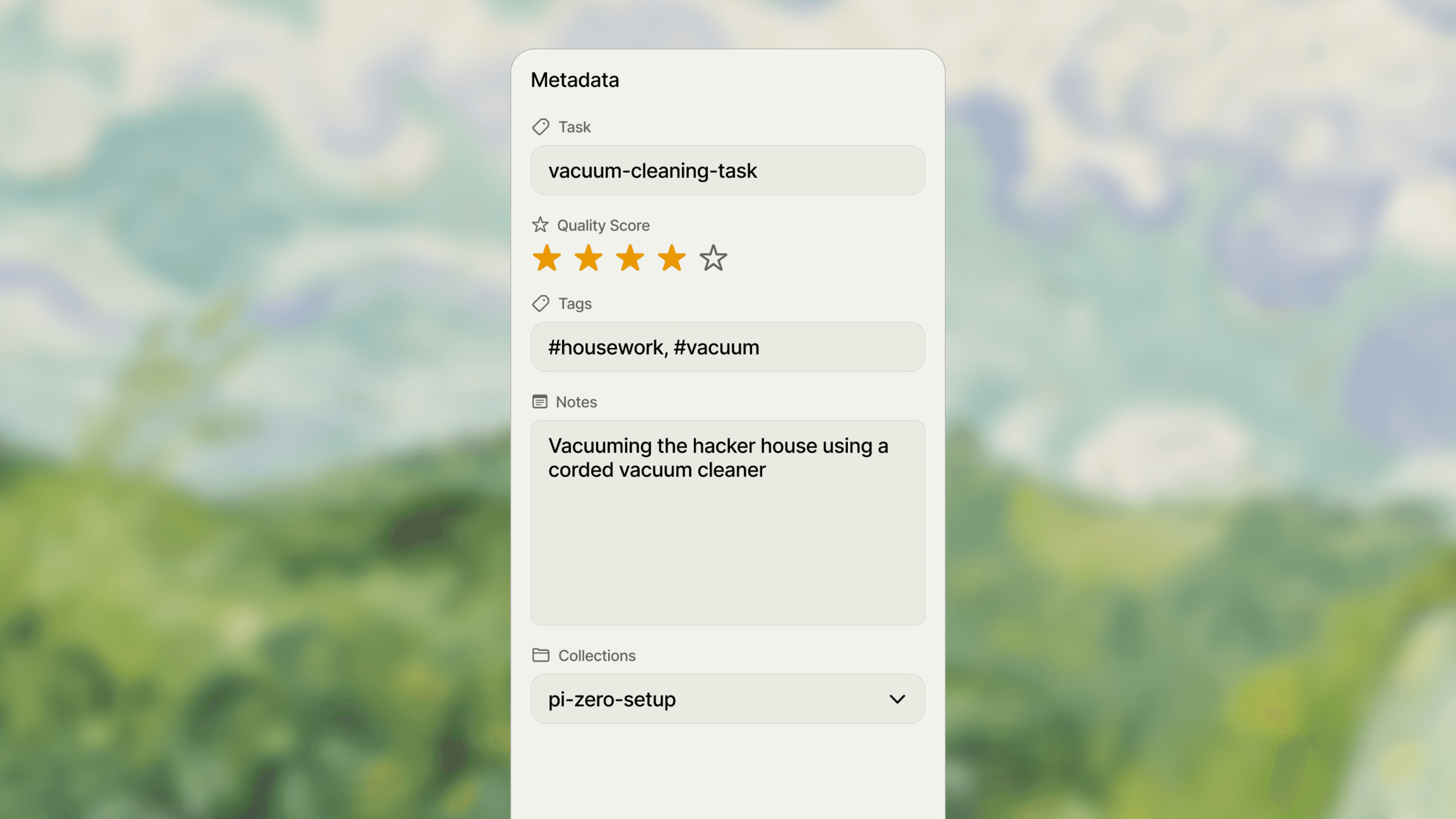

Private Homes

Private homes are the hardest real-world environments to access at scale. We collect authentic domestic interaction data through trusted, consent-based residential setups.

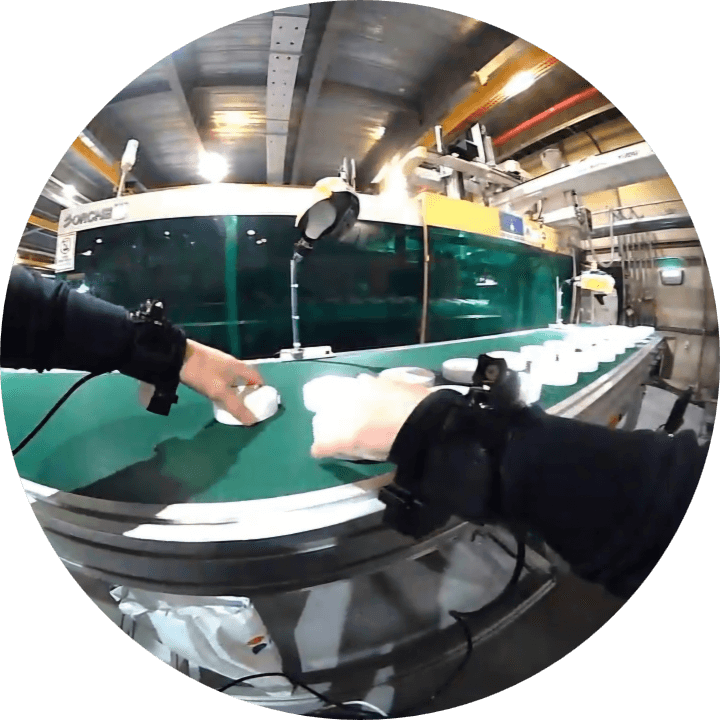

Active Manufacturing Floors

Most robotics teams lack access to live factory environments. We collect real manipulation and workflow data directly from Korea’s active manufacturing facilities.

F&B (Food & Beverage)

Dynamic, semi-public environments rich in human-object interaction. We capture ordering, food handling, handover, and social behaviors in real cafés.

Office

Offices provide structured yet human-centric interaction data: navigation, object use, and social context in everyday professional settings.

Logistics Warehouses

Structured environments optimized for speed, accuracy, and scale. We capture manipulation and workflow data for picking, packing, and sorting in live warehouse operations.

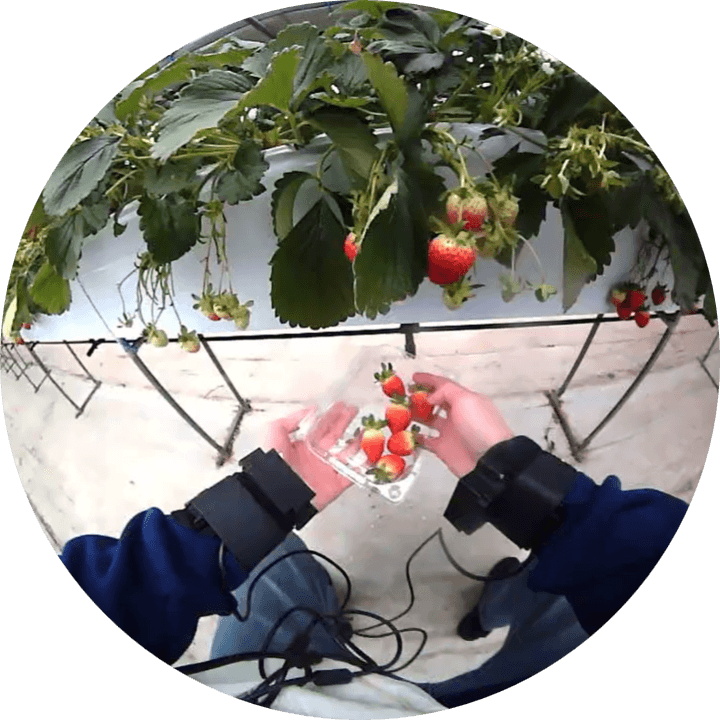

Farm

Farms and greenhouses offer seasonal, unstructured environments where robots must handle delicate objects, variable layouts, and biological processes.

Why OpenGraph?

End-to-End Pipeline

01

Instrument & Capture

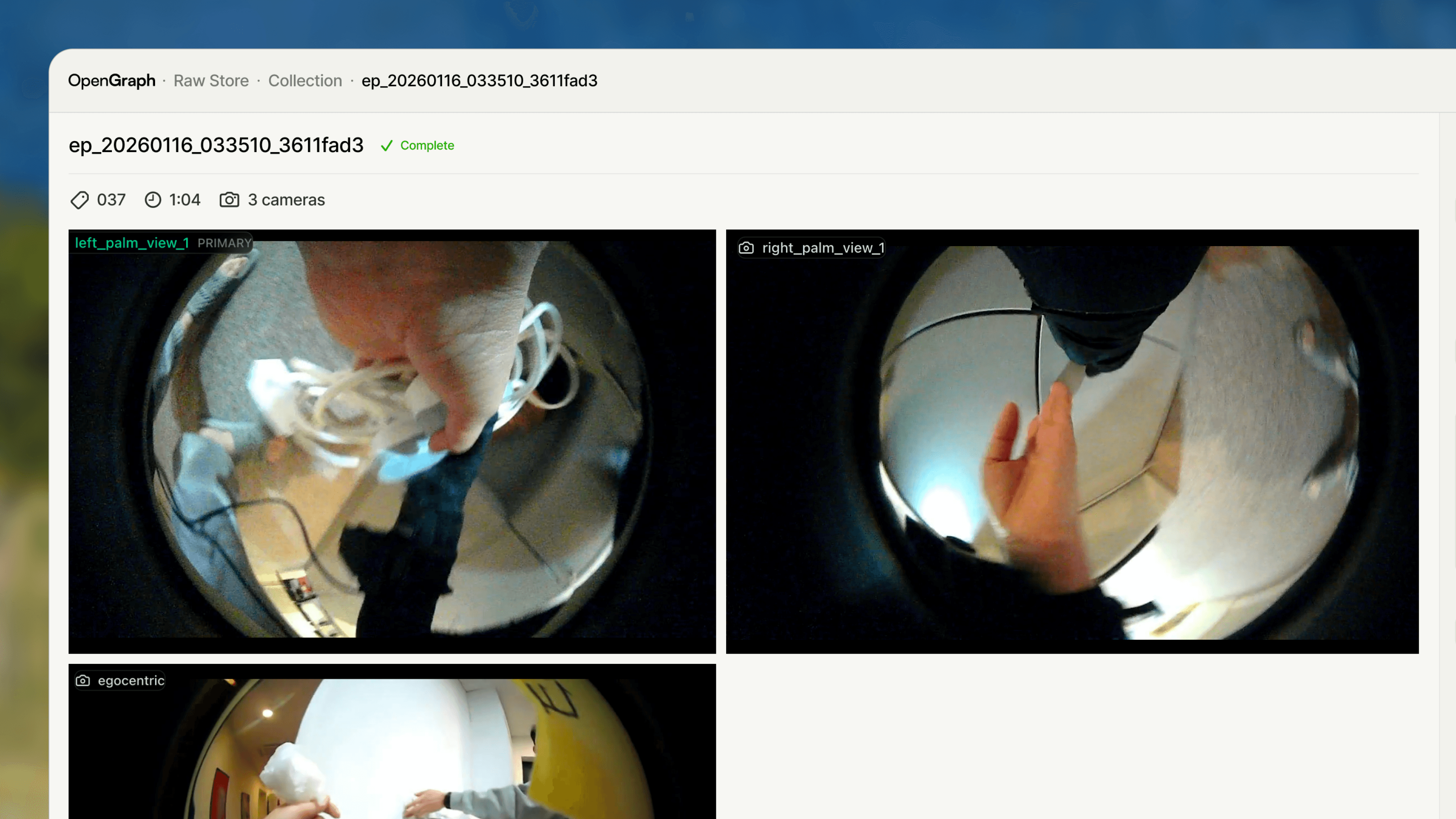

We deploy egocentric, multi-view, and task-specific sensing setups to capture manipulation data in real environments, without constraining natural human motion.

02

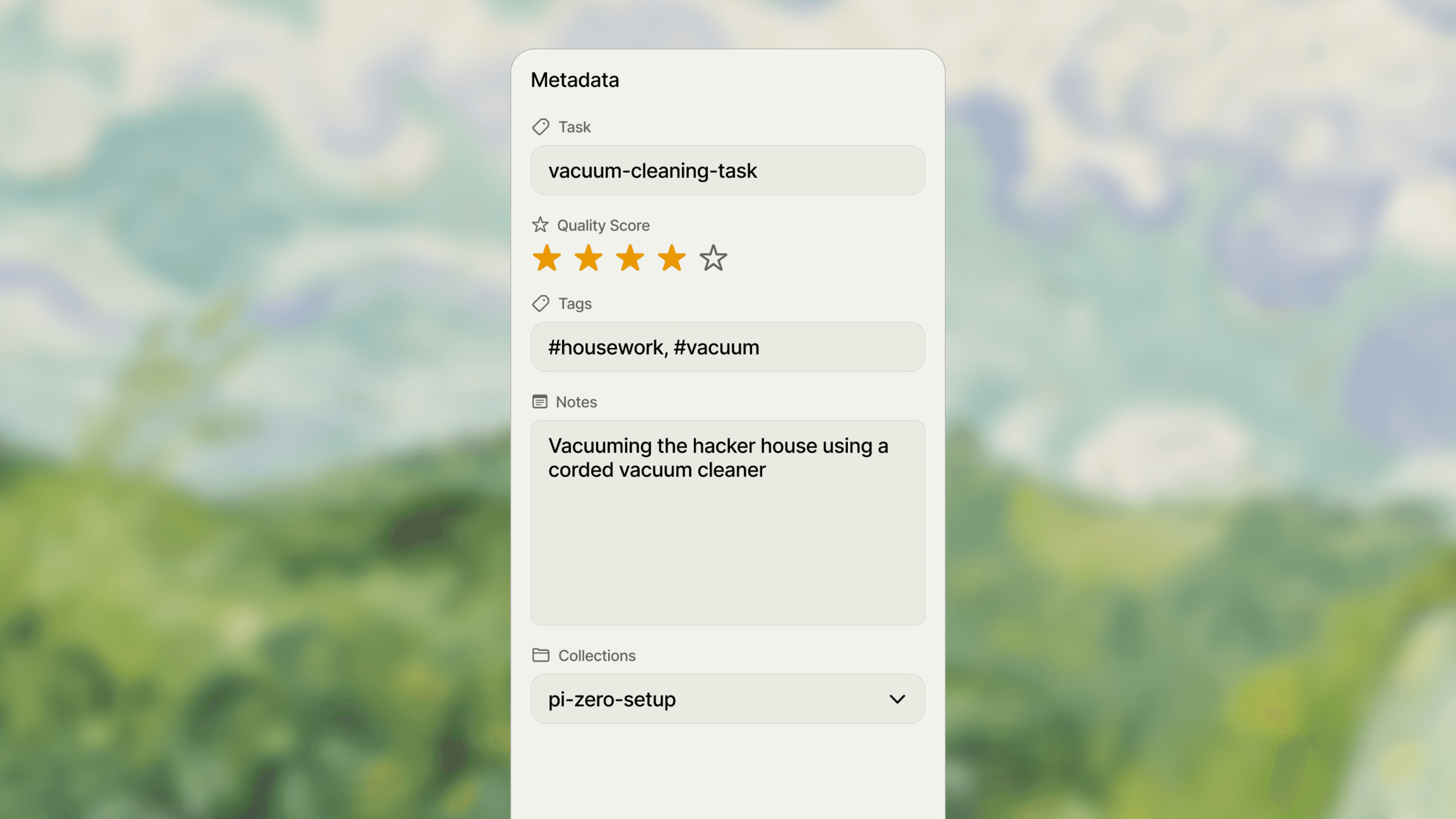

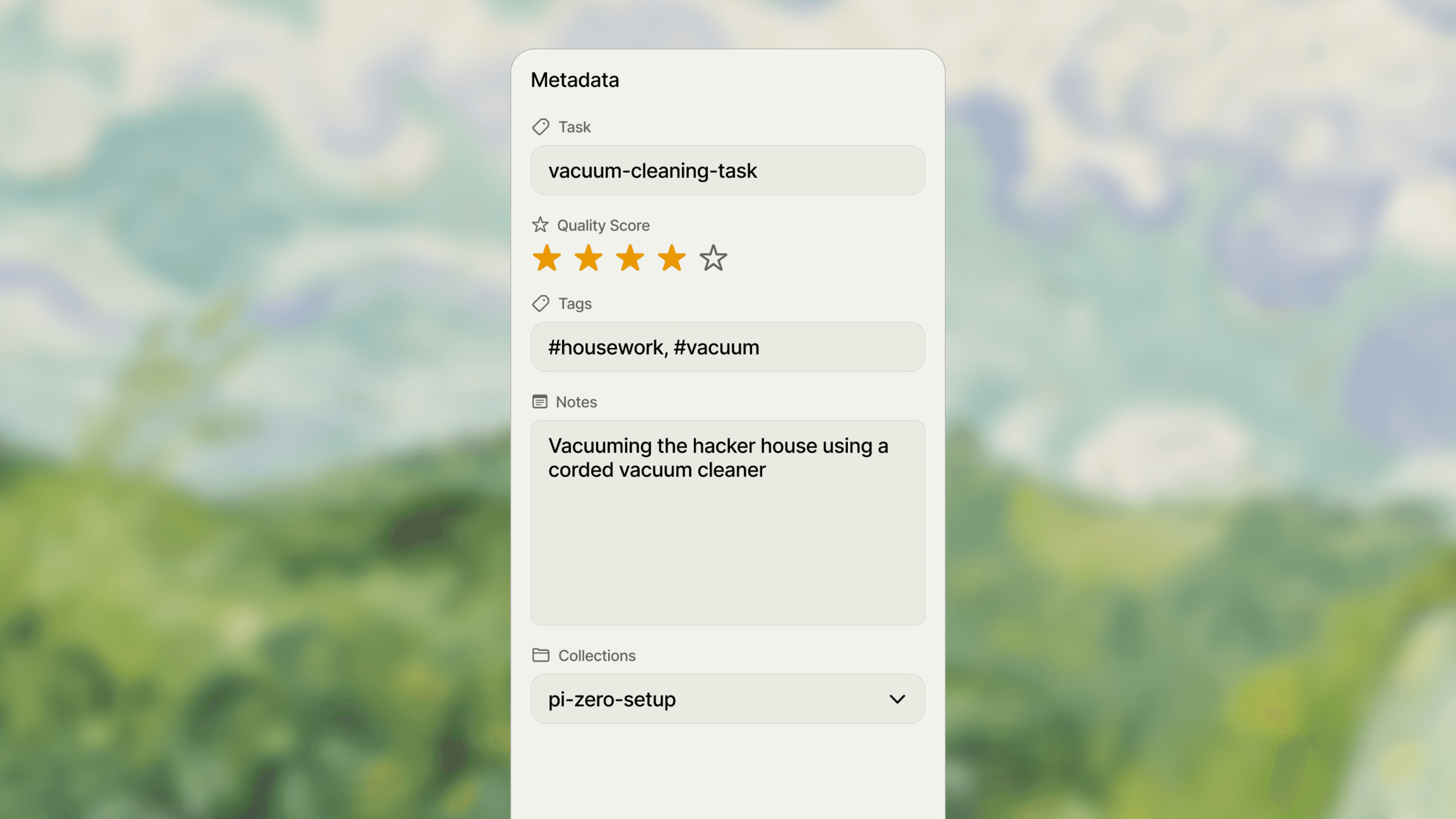

Episode Structuring

We structure raw recordings into task-complete, temporally aligned episodes with action boundaries, making them directly usable for robot training.
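One simple way to turn a continuous recording into discrete episodes is to cut it at action boundaries. The sketch below assumes the boundary timestamps and per-segment labels are already known (for example, from annotation); the `Episode` type and `split_episodes` function are hypothetical illustrations, not OpenGraph's implementation.

```python
from bisect import bisect_left
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class Episode:
    """Hypothetical episode: half-open sample index range [start, end) plus a label."""
    start: int
    end: int
    label: str


def split_episodes(
    timestamps: Sequence[float],
    boundaries: Sequence[float],
    labels: Sequence[str],
) -> List[Episode]:
    """Cut a sorted timestamp sequence into episodes at the given boundary times.

    boundaries holds len(labels) + 1 sorted times (same units as timestamps);
    episode k covers samples with boundaries[k] <= t < boundaries[k + 1].
    """
    if len(boundaries) != len(labels) + 1:
        raise ValueError("need exactly one more boundary than labels")
    episodes = []
    for k, label in enumerate(labels):
        lo = bisect_left(timestamps, boundaries[k])
        hi = bisect_left(timestamps, boundaries[k + 1])
        if hi > lo:  # skip segments that contain no samples
            episodes.append(Episode(lo, hi, label))
    return episodes


if __name__ == "__main__":
    # Illustrative recording sampled every 100 ms, split into three labelled actions.
    ts_ms = list(range(0, 1000, 100))
    for ep in split_episodes(ts_ms, [0, 300, 700, 1000], ["reach", "grasp", "place"]):
        print(ep)
```

Half-open ranges let adjacent episodes share a boundary without double-counting samples.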

03

Manipulation-Aware Validation

We validate each episode based on task completion, interaction quality, and failure modes, filtering out noisy or non-causal demonstrations.
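Validation of this kind can be expressed as a set of per-episode checks, where an episode is kept only if it passes all of them. A minimal sketch, assuming hypothetical metadata fields (`task_completed`, `contact_frames`, `failure_tags`) and an arbitrary contact-ratio threshold as a stand-in proxy for interaction quality; a real pipeline would use its own criteria:

```python
from dataclasses import dataclass, field
from typing import Iterable, List, Tuple


@dataclass
class EpisodeReport:
    """Hypothetical per-episode metadata produced during structuring."""
    episode_id: str
    task_completed: bool
    contact_frames: int  # frames with a measurable contact/force signal
    total_frames: int
    failure_tags: List[str] = field(default_factory=list)


def validate(ep: EpisodeReport, min_contact_ratio: float = 0.05) -> Tuple[bool, str]:
    """Return (keep, reason), checking task completion, a contact-based
    interaction-quality proxy, and tagged failure modes, in that order."""
    if not ep.task_completed:
        return False, "task not completed"
    if ep.total_frames == 0 or ep.contact_frames / ep.total_frames < min_contact_ratio:
        return False, "too little contact (low interaction quality)"
    if ep.failure_tags:
        return False, "failure modes: " + ", ".join(ep.failure_tags)
    return True, "ok"


def filter_episodes(episodes: Iterable[EpisodeReport]) -> List[EpisodeReport]:
    """Keep only the episodes that pass every check."""
    return [ep for ep in episodes if validate(ep)[0]]
```

Returning a reason alongside each decision makes it easier to audit why episodes were dropped.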

Ready to accelerate your Physical AI development?

Let's discuss how our real-world data can fit into your training pipeline.

Building the data engine for Physical AI.

© 2026 OpenGraph Labs Inc. All rights reserved.
